Principal Curvature Aligned Anisotropic Shading

In this post I will discuss the math and code behind a method to generate the automated orientation of a shading vector field using the intrinsic geometric properties of the object.

Background

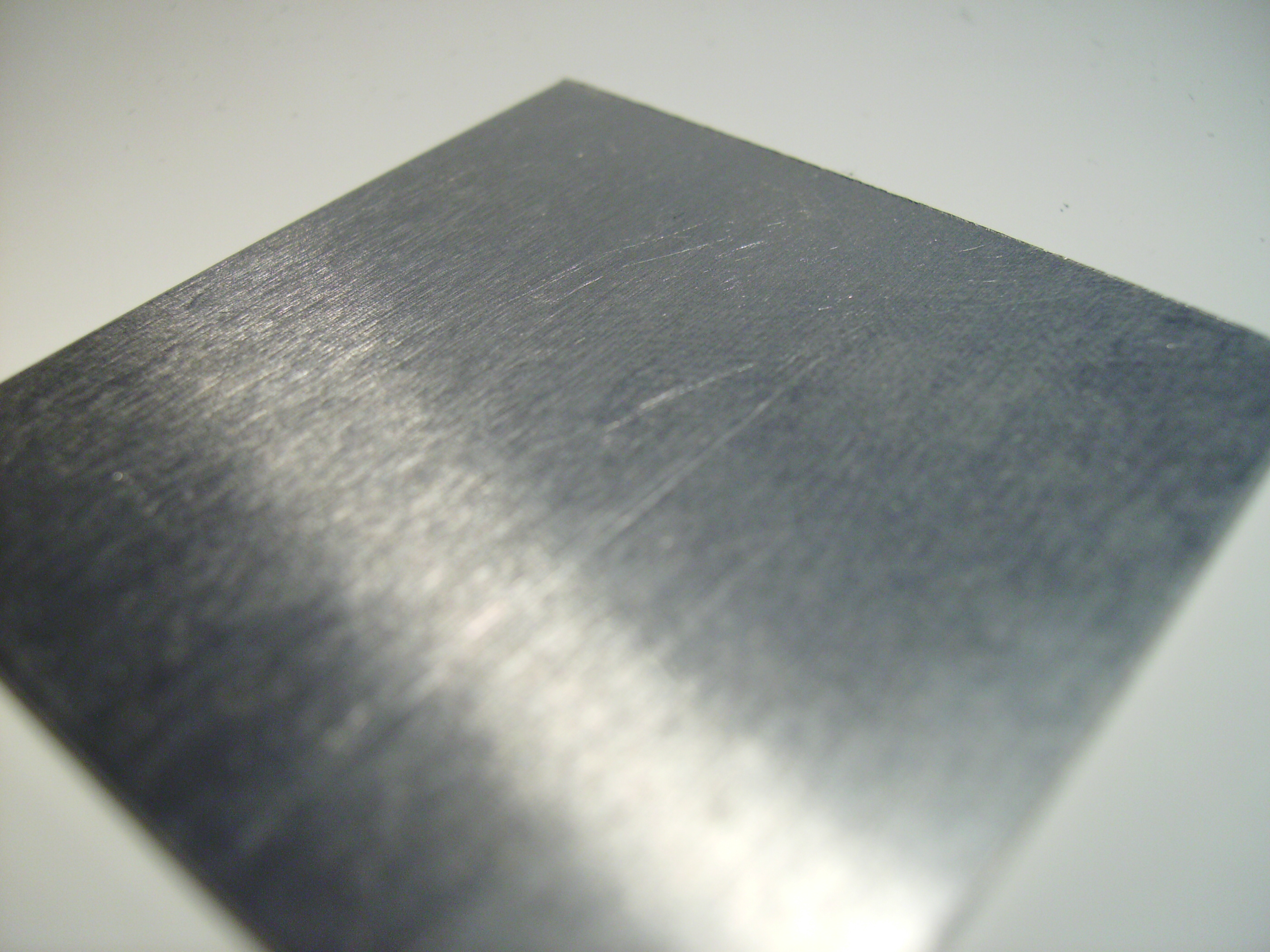

Anisotropic reflectance models are handy for modeling shading behaviours at the microfacet level which conform with some sort of oriented field, for example, brushed metal:

The Ward anisotropic specular reflectance model can (after some simplification, for which I unfortunately do not have a clear reference) be stated as: \begin{equation} \frac{(\mathbf{l}\cdot \mathbf{n})}{(\mathbf{v}\cdot \mathbf{n})} \exp \left( -2 \frac{ \left(\frac{\mathbf{h}\cdot\mathbf{x}}{\alpha_x} \right)^2 + \left(\frac{\mathbf{h}\cdot \mathbf{y}}{\alpha_y} \right)^2}{(1+\mathbf{h} \cdot \mathbf{n})}\right), \end{equation} where \(\mathbf{n}\) is the surface normal at a point, $\mathbf{l}$ is the vector from the point to the light source, \(\mathbf{v}\) is the vector from the point to the eye and \(\mathbf{h}\) is the half vector defined as \(\mathbf{h}=(\mathbf{l}+\mathbf{v})/\|\cdot \| \). The important anisotropic bits are the vectors \(\mathbf{x}, \mathbf{y}\), which define the (orthogonal) directions of anisotropy, and \(\alpha_x\) and \(\alpha_y\) define the standard deviation of the surface slope in the \(\mathbf{x}\) and \(\mathbf{y}\) directions respectively. They scale the “amount” of anisotropy in each of the directions. Note that \(\mathbf{x}\), \(\mathbf{y}\), and \(\mathbf{n}\), should define an orthogonal coordinate frame.

This might be implemented in a fragment shader as something similar to this:

vec3 halfwayVector = normalize(lightDirection + viewDirection);

vec3 normalDirection = normalize(FragNormal);

vec3 tangentDirection = normalize(FragK1);

vec3 binormalDirection = normalize(FragK2); // this also works: cross(normalDirection, tangentDirection);

float dotLN = dot(lightDirection, normalDirection);

vec3 diffuseReflection = attenuation * Light.Ld * Material.Kd * max(0.0, dotLN);

float dotHN = dot(halfwayVector, normalDirection);

float dotVN = dot(viewDirection, normalDirection);

float dotHTAlphaX = dot(halfwayVector, tangentDirection) / alphaX;

float dotHBAlphaY = dot(halfwayVector, binormalDirection) / alphaY;

vec3 specularReflection;

if (dotLN < 0.0) // light source on the wrong side?

{specularReflection = vec3(0.0);} else {

// light source on the right side

specularReflection = attenuation * Material.Ks

* max(0.0,(dotLN/dotVN))

* exp(-2.0 * (dotHTAlphaX * dotHTAlphaX + dotHBAlphaY * dotHBAlphaY) / (1.0 + dotHN));

}

fragColor = vec4(specularReflection + diffuseReflection, 1.0);

In order for us to generate good quality anisotropic fields using this formulation we see that we would have to provide per-pixel values for \(\mathbf{x}\), \(\mathbf{y}\), \(\alpha_x\) and \(\alpha_y\) (note that as \(\mathbf{y}\) is orthogonal to \(\mathbf{x}\) and \(\mathbf{n}\) so theoretically this can be deduced). Typically in a shader the vector field would be inferred by interpolation from a texture map containing direction vectors. As this involves a lot of effort, I looked into something a little more automated.

Lines of Principal Curvature

Most interesting surfaces are curved in some way. You’re probably already familiar with the concept of a normal to a surface: if we zoom in enough to a surface (or manifold) it will look pretty flat. The vector defining the orientation of this plane is called a normal at a point on the surface.

Interestingly there are two other vectors that are defined in that tangent plane - one defines the direction and magnitude in which the rate the normal changes across the surface is at a maximum, and other the least - these are called the maximum and minimum principal curvature directions respectively, and are often symbolized by \(\mathbf{k}_1\) and \(\mathbf{k}_2\) respectively.

These vectors are defined at all points over a smooth surface, and seem like a good choice for an intrinsic surface property suitable for defining our shading orientation, i.e. let \(\mathbf{k}_1 = \alpha_x \mathbf{x}\) and \(\mathbf{k}_2 = \alpha_y \mathbf{y}\). However there is a snag - the flow of the principal curvature direction is undefined, e.g. \(\mathbf{k}_1, \mathbf{k}_2\) and \(-\mathbf{k}_1, -\mathbf{k}_2\) are both equally valid principle curvature directions at any point as the frame can be arbitrarily flipped around (which we’ll see later is a bit of a problem).

Computing the Principal Curvature Directions

There are a number of methods to compute principal curvature directions over a triangle mesh. I’m not going to dwell on it here. The library I used for this is libigl which provides a simple header only library based on the math library Eigen. The full explanation of how libigl deduces the principle curvature directions is described here.

The code for loading up a mesh, deducing vertex normals and extracting the principal curvature directions is below:

// Read a mesh from a file into igl

MatrixXfr V;

MatrixXir F;

igl::read_triangle_mesh(filename, V, F);

// Determine the smooth corner normals

MatrixXfr N;

igl::per_vertex_normals(V,F,N);

// Compute the principle curvature directions and magnitude using quadric fitting

MatrixXfr K1,K2;

Eigen::VectorXf KV1,KV2;

igl::principal_curvature(V,F,K1,K2,KV1,KV2);

Note that the KV1 and KV2 hold the magnitude of each vector (K1 and K2 are normalized). This seemed a bit wasteful so I recombined these into a single vertex structure which contained the position, normal and scaled \(\mathbf{k}_1\) and \(\mathbf{k}_2\):

MatrixXfr KV1_mat(K1.rows(), K1.cols()); KV1_mat << KV1,KV1,KV1;

MatrixXfr KV2_mat(K2.rows(), K2.cols()); KV2_mat << KV2,KV2,KV2;

K1.array() = KV1_mat.array() * K1.array();

K2.array() = KV2_mat.array() * K2.array();

// Now concatenate our per vertex data into a big chunk of data in Eigen

MatrixXfr Vertices(V.rows(), V.cols() + N.cols() + K1.cols() + K2.cols());

Vertices << V, N, K1, K2;

Be wary as what these libraries gain in ease of use they sacrifice in terms of performance, so the application takes quite a while on startup to compute these matrices and vectors.

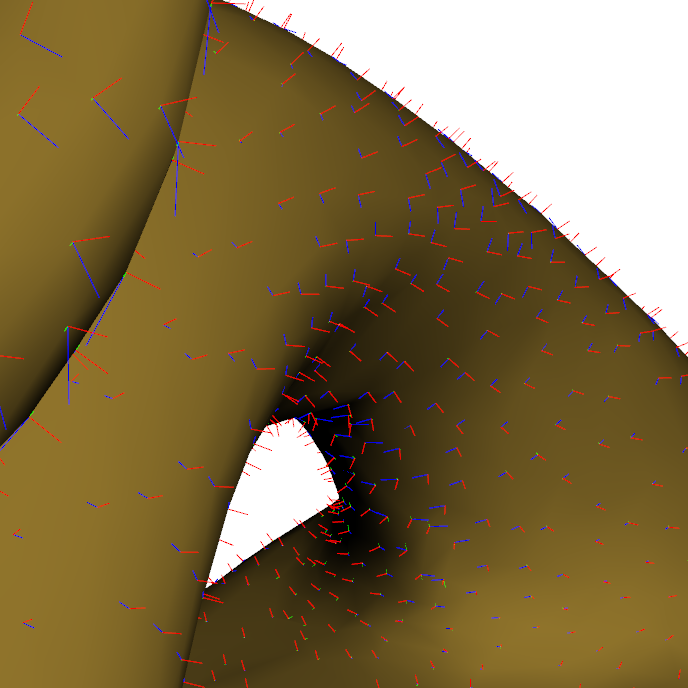

The curvature vectors generated using this process can be visualised as follows:

In this case the coordinate frame at each point is defined by the normal (in red), \(\mathbf{k}_1\), the maximum curvature direction and magnitude (in blue) and \(\mathbf{k}_2\), the minimum curvature direction (in green).

In this case the coordinate frame at each point is defined by the normal (in red), \(\mathbf{k}_1\), the maximum curvature direction and magnitude (in blue) and \(\mathbf{k}_2\), the minimum curvature direction (in green).

Early results

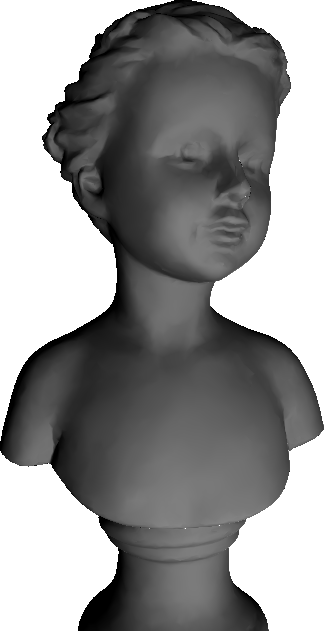

This all sounds like a great idea, but when we render these vectors, we get something a bit unexpected:

Actually, these results are entirely predictable. For a first implementation, the curvatures are defined as vertex properties, which are simply passed on to the fragment shader to shade. This means that the curvatures are being intepolated across the face per fragment.

Actually, these results are entirely predictable. For a first implementation, the curvatures are defined as vertex properties, which are simply passed on to the fragment shader to shade. This means that the curvatures are being intepolated across the face per fragment.

Looking at the curvature directions from the previous section, we can see that the curvature vectors do not define a consistent flow over the surface. This is because the curvature is deduced independently at each vertex, and perfectly valid in either direction of flow, e.g. I could rotate by 180 degrees about the normal and the principle curvature directions are the same.

To the Ward shading algorithm it doesn’t matter, as \(\mathbf{x}\) and \(\mathbf{y}\) are effectively squared so the sign will disappear. However, during rasterization the vectors will be interpolated across the face - the curvature vectors at the corners of a face may be pointing in opposite directions, which means that the interpolated vector will sweep between them.

One option is to re-orient all of the curvature vectors as a preprocess. The only method I’m aware of to consistently orient the curvature field is based on the method described in Anisotropic Polygonal Remeshing by Alliez et al. (also available [here]) but this involves smoothing the tensor field, identifying umbilics and iteratively growing streamlines across the surface, which seemed somewhat tedious.

Curvature Direction Correction on the GPU

One of the convenient properties of the rendering process is that the triangle mesh (which may have shared vertex properties) is essentially broken up into independent triangles for the purposes of rendering as fragments. This effectively means that the vertex properties will be duplicated for each corner of each face coincident on a vertex.

Each face with the vertex properties to be interpolated are passed on to a Geometry Shader before the Fragment Shading stage of the pipeline. At this stage we are free to flip around \(\mathbf{k}_1\) and \(\mathbf{k}_2\) in order to ensure that all the curvature vectors at the corners are consistently oriented for this particular face. Note that which a neighbouring face might have these vectors oriented in a different direction, it doesn’t matter due to the properties of the Ward shading model.

void main() {

// The first triangle vertex is just going to be copied across

gl_Position = gl_in[0].gl_Position;

FragPosition = GeoPosition[0];

FragNormal = GeoNormal[0];

FragK1 = GeoK1[0];

FragK2 = GeoK2[0];

EmitVertex();

for(int i = 1; i < 3; ++i) {

// Copy across the vertex position

gl_Position = gl_in[i].gl_Position;

FragPosition = GeoPosition[i];

FragNormal = GeoNormal[i];

// Determine whether the curvature is flipped, if so, correct the curvature normal

FragK1 = ((dot(GeoK1[0], GeoK1[i]) < 0.0)?-1.0:1.0) * GeoK1[i];

FragK2 = ((dot(GeoK2[0], GeoK2[i]) < 0.0)?-1.0:1.0) * GeoK2[i];

// Emit the vertex of this primitive

EmitVertex();

}

// Finish the triangle (strip)

EndPrimitive();

}

As you can see all this does is copy across the first vertex, and then copy across the other two triangle vertices, but first possibly flipping the curvature vectors if they are oriented differently to the first vertex. Not particularly high-brow stuff, but it gets the job done.

Final Results

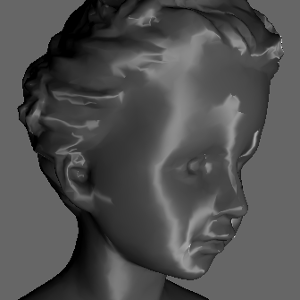

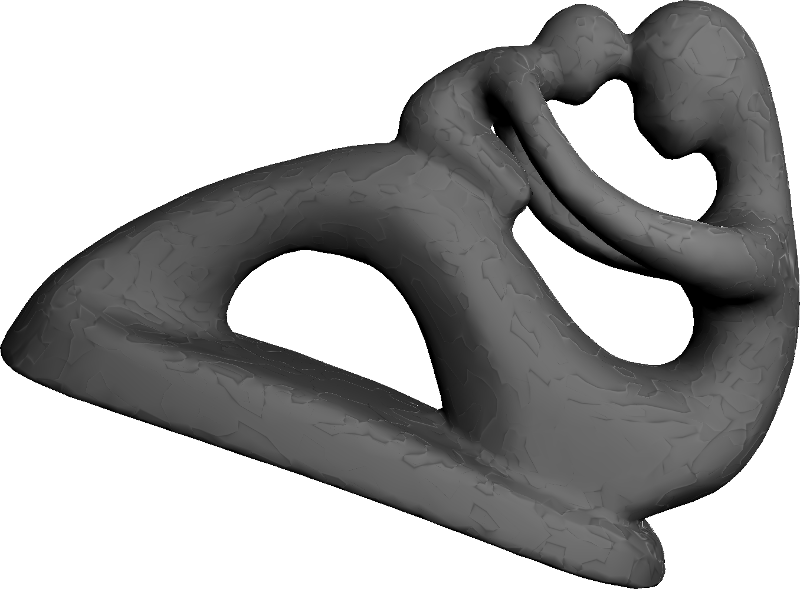

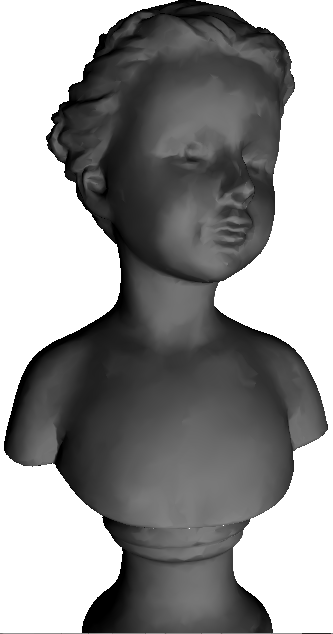

Here is the bust model with three different scalings of the anisotropy aligned to the principle curvature directions:

|  |  |

While the face flipping problem has been fixed, the obvious issue with these results in that the curvature is not particularly smooth, mainly because of the resolution of the model but also because there has been not smoothing of the vector field. There are also issues relating to the placement of umbilics. These things could be improved by performing smoothing as described in this method or using a mesh with a higher resolution. In addition, a method which allows the user to “hide” umbilic points in geometric areas where they won’t be seen would be useful.

Downloads

The source code is hosted on GitHub. Clone it from the repository using the following command from your console:

git clone https://github.com/rsouthern/Examples

This example is under rendering/curv.